AI in Software Testing: The Game-Changer for Modern QA Teams

AI is on the rise everywhere, and software testing is no exception. As companies push to release higher-quality software faster, legacy QA methodologies are struggling to keep up with today's development cycles. Manual testing remains a robust process, but it is inherently time-consuming, labor-intensive, and error-prone. Test automation has lessened the manual burden, but it is limited by brittle scripts and high maintenance costs.

This is where AI-based testing comes in. Underpinned by machine learning (ML), natural language processing (NLP), and predictive analytics, it is transforming defect detection, test optimization, and user experience validation. With AI powering testing frameworks, QA teams can reach new levels of efficiency, precision, and versatility. This article explores the theoretical basis of AI in software testing and discusses its evolution, core principles, benefits, challenges, and future direction.

The Journey of Software Testing: Manual to AI-Driven

Manual Testing Drawbacks

Manual testing is the oldest form of testing: people execute predefined test cases by hand, without automation, to check new software before it is released. Although this approach lets testers explore functionality and detect bugs intuitively, it is inherently limited in scalability and repeatability. Human testers introduce fatigue, oversights, and inconsistency, especially on large applications that require extensive regression testing. Manual testing also struggles to keep pace with the rapid iteration cycles of Agile and DevOps.

Test Automation on the Rise

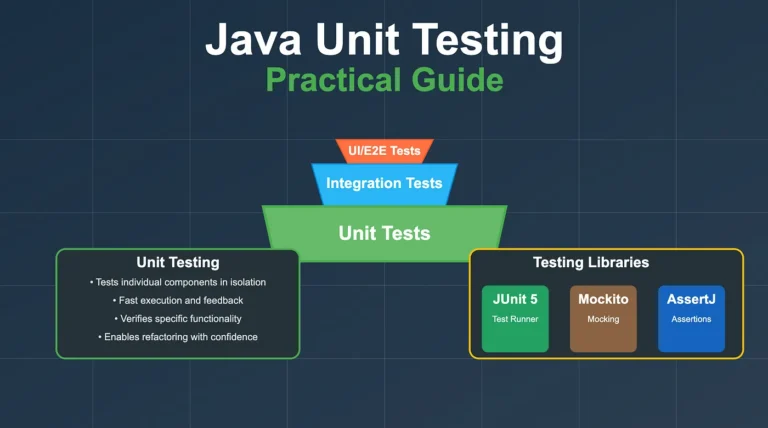

Test automation was a big step forward, allowing repetitive test cases to run via scripted tools such as Selenium, Appium, or JUnit. Automation reduced human involvement in test execution, sped up test cycles, and increased reliability. However, traditional automation frameworks rely on static scripts that must be updated manually whenever the application under test changes. This brittleness often creates maintenance bottlenecks, especially in fast-moving development environments.

AI as the Next Stage in Evolution

AI-driven testing represents a paradigm shift: instead of fixed processes, it introduces adaptive, self-adjusting ones. Unlike traditional automation, AI-powered tools use machine learning algorithms to analyze how applications behave, anticipate points of failure, and automatically adapt test scripts to changes. Several fundamental AI techniques underpin this ability:

- Supervised Learning: Models are trained on historical test data to recognize patterns and defects.

- Unsupervised Learning: Clustering methods uncover unusual behavior that could represent unknown bugs.

- Reinforcement Learning: An agent refines its testing strategy over time by learning from the outcomes of its past actions.
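To make the unsupervised case concrete, here is a minimal sketch that clusters test runs with plain k-means and flags runs that land in unusually small clusters. It assumes each run is summarized by two hypothetical, comparably scaled metrics (for example, duration and resource use); the function names, the deterministic farthest-point seeding, and the small-cluster rule are illustrative choices, not how any particular tool works.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # one test run: two hypothetical, comparably scaled metrics


def farthest_point_seeds(points: Sequence[Point], k: int) -> List[Point]:
    """Deterministic seeding: start at the first point, then repeatedly add
    the point farthest from every seed chosen so far."""
    seeds = [points[0]]
    while len(seeds) < k:
        seeds.append(max(points, key=lambda p: min(math.dist(p, s) for s in seeds)))
    return seeds


def kmeans(points: Sequence[Point], k: int, iterations: int = 20) -> List[int]:
    """Plain Lloyd's k-means; returns the cluster label of each point."""
    centroids = farthest_point_seeds(points, k)
    labels: List[int] = []
    for _ in range(iterations):
        # Assignment step: each run joins its nearest centroid.
        labels = [min(range(k), key=lambda i: math.dist(p, centroids[i])) for p in points]
        # Update step: move each centroid to the mean of its members.
        for i in range(k):
            members = [p for p, label in zip(points, labels) if label == i]
            if members:
                centroids[i] = (
                    sum(x for x, _ in members) / len(members),
                    sum(y for _, y in members) / len(members),
                )
    return labels


def flag_anomalies(points: Sequence[Point], k: int = 3, min_cluster_size: int = 2) -> List[int]:
    """Return indices of runs that fall into clusters too small to look like normal behavior."""
    labels = kmeans(points, k)
    return [i for i, label in enumerate(labels) if labels.count(label) < min_cluster_size]


# Two groups of normal runs plus one run that behaves like neither.
runs = [(1.0, 2.0), (1.2, 2.1), (0.9, 1.9), (1.1, 2.2),
        (8.0, 9.0), (8.2, 9.1), (7.9, 8.8), (8.1, 9.2),
        (4.0, 15.0)]
print(flag_anomalies(runs))  # → [8]
```

The outlying run ends up alone in its own cluster, so the small-cluster rule flags it without any labeled examples of "bad" runs.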

By combining these techniques, AI turns testing from a reactive process into a proactive, intelligent one that evolves along with the software it validates.

AI for Software Testers: The Basics

AI-Based Automated Test Case Generation

One of AI's key contributions to software testing is automatic test case generation. Most traditional test case generation techniques depend on human effort and become less reliable as new features are added to the system. AI can address this challenge by analyzing application requirements, user interaction logs, and historical defect data to build optimized test scenarios.

Machine learning systems, especially those involving genetic algorithms and neural networks, can "play through" a very large number of potential user pathways and flag edge cases that a human QA tester may miss. For example, reinforcement learning can be applied to explore an application's states, prioritizing test cases that maximize coverage and reusing test results as much as possible. Such an approach not only makes testing more efficient but also enables more thorough verification of software and system functionality.
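As an illustration of the genetic-algorithm idea, the sketch below evolves integer inputs for a hypothetical function until they reach a hard-to-hit branch. It uses the classic search-based testing fitness known as branch distance (how far an input is from satisfying the branch condition). The function under test, the 0 to 255 input domain, the population size, and the mutation steps are all assumptions chosen for illustration.

```python
import random
from typing import List, Tuple

TestInput = Tuple[int, int]  # (x, y) arguments for the function under test


def function_under_test(x: int, y: int) -> str:
    """Hypothetical code under test with a branch that random inputs rarely reach."""
    if x == 42 and y > 100:
        return "rare-branch"
    return "common-path"


def branch_distance(candidate: TestInput) -> int:
    """Fitness: how far an input is from satisfying `x == 42 and y > 100` (0 = branch taken)."""
    x, y = candidate
    return abs(x - 42) + max(0, 101 - y)


def crossover(a: TestInput, b: TestInput) -> TestInput:
    """Take x from one parent and y from the other."""
    return (a[0], b[1])


def mutate(candidate: TestInput, rng: random.Random) -> TestInput:
    """Nudge one gene by a small or large step, clamped to the 0..255 input domain."""
    x, y = candidate
    step = rng.choice([-10, -1, 1, 10])
    if rng.random() < 0.5:
        x = min(255, max(0, x + step))
    else:
        y = min(255, max(0, y + step))
    return (x, y)


def evolve(pop_size: int = 20, generations: int = 1000, seed: int = 7) -> TestInput:
    rng = random.Random(seed)
    population: List[TestInput] = [(rng.randint(0, 255), rng.randint(0, 255))
                                   for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=branch_distance)
        best = population[0]
        if branch_distance(best) == 0:
            return best  # found an input that covers the target branch
        parents = population[: pop_size // 2]  # truncation selection: keep the fitter half
        children = [best]  # elitism: never lose the best input found so far
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b), rng))
        population = children
    return min(population, key=branch_distance)


best_input = evolve()
print(best_input, function_under_test(*best_input))
```

Because the fitness gives a gradient toward the branch condition, the search converges far faster than random input generation would.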

Self-Healing Test Automation

One of the stickiest issues in test automation is the brittleness of test scripts, which tend to break with the slightest change in the UI or application behavior. AI addresses this with self-healing techniques that adapt test scripts to changes in the application.

Computer vision, a subset of AI, allows testing tools to "see" and interact with an application's interface much as a human would. Using techniques such as object recognition and pattern matching, AI can still identify UI elements when underlying attributes such as XPath expressions or CSS selectors change. This capability reduces maintenance effort and keeps tests running without manual intervention.
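Self-healing tools typically combine computer vision with analysis of the page structure. The sketch below shows only the simpler attribute-matching half of that idea: when the recorded id no longer exists, it picks the element whose attributes best match a fingerprint captured when the test was written. The DOM-as-dictionaries model, the fingerprint fields, and the 0.6 threshold are hypothetical.

```python
from typing import Dict, List, Optional

Element = Dict[str, str]  # hypothetical DOM element: attribute name -> value


def similarity(fingerprint: Element, candidate: Element) -> float:
    """Fraction of the recorded attributes that still match on a candidate element."""
    if not fingerprint:
        return 0.0
    matches = sum(1 for key, value in fingerprint.items() if candidate.get(key) == value)
    return matches / len(fingerprint)


def find_element(dom: List[Element], element_id: str, fingerprint: Element,
                 threshold: float = 0.6) -> Optional[Element]:
    # 1. Try the original (brittle) locator first.
    for element in dom:
        if element.get("id") == element_id:
            return element
    # 2. Self-heal: fall back to the element most similar to the recorded fingerprint.
    best = max(dom, key=lambda el: similarity(fingerprint, el), default=None)
    if best is not None and similarity(fingerprint, best) >= threshold:
        return best
    return None  # nothing similar enough: report a genuine failure


# Attributes recorded when the test was written (the button's id was then "submit-btn").
fingerprint = {"tag": "button", "text": "Submit", "class": "btn-primary", "name": "submit"}

# After a UI refactor, the id and class changed but the button is still there.
dom = [
    {"tag": "input", "id": "email", "name": "email"},
    {"tag": "button", "id": "cancel", "text": "Cancel", "class": "btn btn-secondary"},
    {"tag": "button", "id": "submit-v2", "text": "Submit", "class": "btn btn-primary",
     "name": "submit"},
]
healed = find_element(dom, "submit-btn", fingerprint)
print(healed["id"] if healed else None)  # → submit-v2
```

The threshold matters: too low and the test silently clicks the wrong element, too high and it never heals.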

Defect Prediction and Risk-Based Testing Approaches

AI adds value in defect detection by applying predictive analytics to identify high-risk areas of an application. Based on historical defect data, code complexity metrics, and development trends, machine learning models can predict where bugs are likely to be found.

This enables QA teams to perform risk-based testing and focus their resources on the most critical areas of the software. For instance, AI may prioritize modules whose code changes frequently or that have historically shown higher defect densities. This focused approach helps surface the most important defects early in the development process while improving efficiency.
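A minimal sketch of this idea, assuming each module is described by two hypothetical features scaled to [0, 1] (recent code churn and historical defect density) and labeled 1 if it later shipped a defect: train a logistic regression with plain gradient descent, then rank current modules by predicted risk. The data, learning rate, and epoch count are illustrative, not tuned.

```python
import math
from typing import List, Sequence


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    """Predicted defect probability; w[0] is the bias term."""
    return sigmoid(w[0] + sum(wj * xj for wj, xj in zip(w[1:], x)))


def train_logistic(X: Sequence[Sequence[float]], y: Sequence[int],
                   lr: float = 0.1, epochs: int = 2000) -> List[float]:
    """Fit logistic regression with plain batch gradient descent on the log-loss."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for xi, yi in zip(X, y):
            error = predict(w, xi) - yi  # gradient of the log-loss w.r.t. the logit
            grad[0] += error
            for j, xj in enumerate(xi, start=1):
                grad[j] += error * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


# Hypothetical history: (code churn, past defect density), both scaled to [0, 1];
# label 1 means the module later shipped a defect.
history_X = [(0.9, 0.8), (0.8, 0.6), (0.7, 0.9), (0.2, 0.1), (0.1, 0.3), (0.3, 0.2)]
history_y = [1, 1, 1, 0, 0, 0]
w = train_logistic(history_X, history_y)

# Rank current modules so the riskiest ones get tested first.
modules = {"docs": (0.05, 0.1), "payments": (0.85, 0.7), "search": (0.5, 0.4)}
ranked = sorted(modules, key=lambda m: predict(w, modules[m]), reverse=True)
print(ranked)  # → ['payments', 'search', 'docs']
```

In practice, the ranking rather than the raw probability is what drives risk-based test scheduling, so a model only needs to order modules sensibly to be useful.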

Visual Testing and AI

Visual validation is vital for user experience testing, ensuring that applications render correctly across devices and screen sizes. Classical visual testing relies on pixel-by-pixel comparison, which is typically fragile and can produce false positives for minor rendering differences.

AI-driven visual testing overcomes these shortcomings by using sophisticated image recognition algorithms that can distinguish intentional design changes from real defects. With techniques such as perceptual diffing and convolutional neural networks (CNNs), AI can better identify even subtle visual imperfections such as misaligned elements or color mismatches.
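The gap between pixel-exact and tolerance-based comparison can be shown without any ML at all. The sketch below is a simplified stand-in for perceptual diffing, not a CNN: it ignores per-pixel color shifts below a tolerance and fails only when enough pixels change meaningfully. Images are modeled as nested lists of RGB tuples, and both thresholds are hypothetical.

```python
import math
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255
Image = List[List[Pixel]]     # rows of pixels


def strict_diff(baseline: Image, current: Image) -> int:
    """Classical pixel-wise comparison: count every pixel that differs at all."""
    return sum(1 for row_a, row_b in zip(baseline, current)
               for a, b in zip(row_a, row_b) if a != b)


def tolerant_diff(baseline: Image, current: Image, color_tolerance: float = 10.0) -> float:
    """Fraction of pixels whose Euclidean RGB distance exceeds `color_tolerance`."""
    total = changed = 0
    for row_a, row_b in zip(baseline, current):
        for a, b in zip(row_a, row_b):
            total += 1
            if math.dist(a, b) > color_tolerance:
                changed += 1
    return changed / total if total else 0.0


def is_visual_regression(baseline: Image, current: Image,
                         color_tolerance: float = 10.0, max_changed_ratio: float = 0.01) -> bool:
    return tolerant_diff(baseline, current, color_tolerance) > max_changed_ratio


WHITE: Pixel = (255, 255, 255)
baseline = [[WHITE] * 10 for _ in range(10)]
# Subtle color drift on every pixel, e.g. from a different rendering engine.
drifted = [[(253, 254, 255)] * 10 for _ in range(10)]
# A real defect: a 2x2 block rendered bright red.
broken = [row[:] for row in baseline]
for r in range(2):
    for c in range(2):
        broken[r][c] = (255, 0, 0)

print(strict_diff(baseline, drifted))           # → 100 (the pixel-exact check fails)
print(is_visual_regression(baseline, drifted))  # → False
print(is_visual_regression(baseline, broken))   # → True
```

The pixel-exact check flags every pixel of the harmless drift, while the tolerant check ignores it and still catches the small but real defect.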

NLP in Test Scripting

Test automation tools that integrate NLP are growing in popularity because they democratize test automation, allowing non-technical team members to design test cases. NLP models can translate test cases written in plain English into executable test code.

This approach bridges the gap between business logic and technical implementation, letting domain experts describe tests without programming. NLP can also parse requirement documents and automatically draft test scenarios that align directly with development and QA goals.
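Production NLP tools rely on trained language models, but the basic translation from plain-English steps into structured, executable actions can be sketched with simple pattern matching. The step phrasings, action names, and patterns below are hypothetical; a real system would generalize far beyond fixed templates.

```python
import re
from typing import List, Tuple

Step = Tuple[str, ...]  # (action, *arguments)

# Hypothetical phrasings mapped to hypothetical action names.
PATTERNS = [
    (re.compile(r'^(?:open|go to|navigate to) (\S+)$', re.I), "navigate"),
    (re.compile(r'^(?:type|enter) "([^"]*)" into (?:the )?([\w ]+?) field$', re.I), "type"),
    (re.compile(r'^click (?:on )?(?:the )?([\w ]+?) button$', re.I), "click"),
    (re.compile(r'^(?:verify|check) (?:that )?(?:the )?page (?:shows|contains) "([^"]*)"$',
                re.I), "assert_text"),
]


def parse_step(sentence: str) -> Step:
    """Translate one plain-English step into an (action, *args) tuple."""
    sentence = sentence.strip().rstrip(".")
    for pattern, action in PATTERNS:
        match = pattern.match(sentence)
        if match:
            return (action, *match.groups())
    raise ValueError(f"Unrecognized test step: {sentence!r}")


def parse_test_case(text: str) -> List[Step]:
    """Parse one step per non-blank line."""
    return [parse_step(line) for line in text.splitlines() if line.strip()]


spec = """
Open https://example.com/login
Type "alice@example.com" into the email field
Click the sign in button
Verify that the page shows "Welcome, Alice"
"""
for step in parse_test_case(spec):
    print(step)
```

Each resulting tuple could then be dispatched to a browser driver; raising on unrecognized steps keeps ambiguous instructions from being silently skipped.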

One such GenAI test assistant is KaneAI. KaneAI by LambdaTest is an AI test agent that allows teams to create, debug, and evolve tests using natural language. It is built from the ground up for high-speed quality engineering teams and integrates with the rest of LambdaTest's offerings for test execution, orchestration, and analysis.

KaneAI Key Features:

- Intelligent Test Generation: Effortless test creation and evolution through natural language (NLP)-based instructions.

- Intelligent Test Planner: Automatically generate and automate test steps from high-level objectives.

- Multi-Language Code Export: Convert your automated tests into all major languages and frameworks.

- Sophisticated Testing Capabilities: Express sophisticated conditionals and assertions in natural language.

- Smart Show-Me Mode: Convert your actions into natural language instructions to generate robust tests.

AI in Performance Testing

Performance testing evaluates how an application behaves under different levels of load, identifying where it slows down and where users would experience poor performance. Traditional performance testing relies on static scripts that attempt to emulate realistic user interactions, which becomes difficult for highly complex applications.

AI improves performance testing by creating dynamic load profiles that simulate real user activity. Machine learning models analyze production traffic data to generate realistic test scenarios, making performance tests more representative and comprehensive. AI can also detect gradual performance degradation trends, so teams can optimize proactively before end users are affected.
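Detecting gradual degradation can start with something as simple as a trend line. The sketch below fits an ordinary least-squares slope to a series of latency measurements (for example, p95 response times from successive builds) and flags the series when latency grows faster than a threshold. This is a deliberately simple statistical stand-in for the ML models described above; the threshold and the sample numbers are hypothetical.

```python
from statistics import mean
from typing import Sequence


def latency_trend(latencies_ms: Sequence[float]) -> float:
    """Least-squares slope of latency versus build index, in ms per build."""
    n = len(latencies_ms)
    if n < 2:
        raise ValueError("need at least two measurements")
    xs = range(n)
    x_bar, y_bar = mean(xs), mean(latencies_ms)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, latencies_ms))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def is_degrading(latencies_ms: Sequence[float], max_slope_ms_per_build: float = 2.0) -> bool:
    """Flag a series whose latency grows faster than the allowed slope."""
    return latency_trend(latencies_ms) > max_slope_ms_per_build


rising = [200, 204, 209, 212, 217, 221]  # no single build looks alarming
stable = [200, 201, 199, 200, 202, 200]
print(round(latency_trend(rising), 3))              # → 4.2
print(is_degrading(rising), is_degrading(stable))   # → True False
```

No individual measurement in the rising series would trip a fixed latency budget, which is exactly why a trend check can catch regressions earlier than a per-build threshold.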

Benefits of AI in Software Testing: A Conceptual Analysis

From a theoretical point of view, AI offers several potential benefits to software testing:

Improved Efficiency and Acceleration

AI speeds up test execution and analysis, shortening feedback loops in Agile and DevOps environments. By automating routine activities and improving test coverage, AI frees QA teams to concentrate on high-value tasks such as exploratory and usability testing.

Higher Accuracy and Reliability

Human testers are inherently prone to error, especially on tedious or repetitive work. AI reduces this variability by applying a uniform, data-driven validation standard. AI can also learn from historical results, potentially reducing false positives and false negatives and ultimately improving test reliability.

Scalability and Adaptability

AI-powered testing scales more readily to larger and more intricate applications. Unlike manual testing or scripted automation, AI-driven automation can learn and evolve with an application, providing continuous validation with minimal upkeep.

Preventing Defects: A Proactive Approach

Conventional testing is primarily reactive, focusing on discovering defects after they occur. AI shifts this perspective by using predictive analytics and risk analysis to help teams prevent problems before they arise, reducing risk early in the development lifecycle.

Challenges and Theoretical Limits of AI-Driven Testing

While promising, AI-driven testing is not without its challenges:

Dependence on Quality Training Data

AI models need large amounts of high-quality data to learn from. Insufficient or biased training data can lead to suboptimal test coverage and incorrect predictions. Access to representative data is a prerequisite for making AI-driven testing work in practice.

Interpretability and Transparency

Many AI models, especially deep learning models, are “black boxes,” making it difficult to understand how they arrive at their decisions. This lack of interpretability is problematic in testing, where traceability and accountability are critical.

Integration with Existing Workflows

Introducing AI into testing is a significant shift for existing QA processes and toolchains. Teams may lack people skilled in the new technology, and staff accustomed to established practices may need substantial training and change management.

Ethical and Security Issues

Applying AI to testing raises ethical concerns in areas such as data privacy and algorithmic bias. AI systems themselves must also be analyzed thoroughly to ensure they do not introduce new vulnerabilities or unintended effects.

What is the Future of AI in Software Testing?

Here are the AI-driven software testing trends you need to know in 2025:

Autonomous QA Systems

Could AI systems one day become fully autonomous, designing, executing, and maintaining test suites without any human involvement? Such systems would continuously ingest fresh data and adapt their strategies as application requirements change.

AI-Augmented Human Testing

AI won’t so much replace human testers as become a collaborator, enhancing human knowledge with data-driven analysis. Such a mutualistic relationship could lead to more creative and ultimately better testing strategies.

Security and Compliance Testing Expansion

AI's ability to process large data sets and identify anomalies makes it a natural fit for security testing. Future applications could include automated vulnerability scanning, penetration testing, and compliance checking.

Self-Learning Test Environments

AI could also be used to create test environments that learn from real-world usage. Such environments would automatically adjust test parameters based on observed user behavior, enabling more representative validation.

Conclusion

The application of AI in software testing marks a new way of thinking about and performing quality assurance. By leveraging machine learning, natural language processing, and predictive analytics, AI turns testing from a manual, reactive process into an intelligent, proactive one. Despite remaining challenges such as interpretability and data dependency, the theoretical and practical advantages of AI-based testing are clear.

AI is still developing rapidly, and QA teams need to stay ahead of the curve by adopting this new model and adapting their skills and workflows toward fully intelligent testing. The future of software testing lies in a symbiotic relationship between humans and AI, making testing faster, more robust, and more innovative.